The AI Confusion: Two Paths Diverge

Why "mastering" artificial intelligence misses what mathematics itself reveals

A note to readers:

This morning, former Prime Minister Rishi Sunak published an article in The Times urging Britain to “embrace AI” and “tool up our people for future success.” His advice sounds sensible. The confusion it embodies is civilisation-threatening.

What follows is not a critique of technical skills training. It’s a recognition that the entire framing, “master AI or be left behind,” assumes something fundamentally false: that AI is simply a tool to control, that intelligence is a capacity individuals possess, that progress means humans maintaining dominance over increasingly sophisticated instruments.

But when physicists and mathematicians follow their disciplines all the way down, they don’t find separate objects that then relate. They find relationship itself—patterns that temporarily stabilise into what we call “things.”

This suggests something radical: that intelligence has never been what we thought it was.

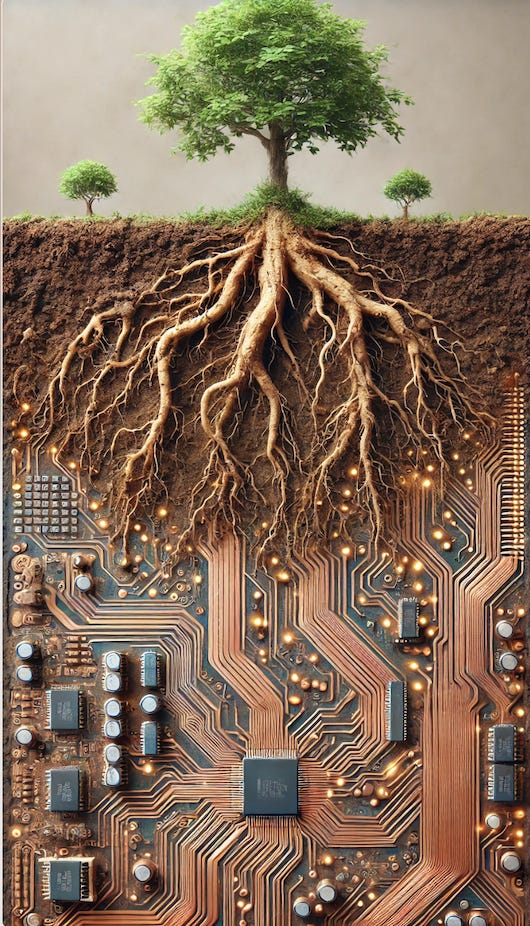

Biological intelligence and symbolic intelligence: two forms that need each other

Opening: The Real Problem

Our problem is not that politicians misunderstand AI.

It’s that they misunderstand intelligence.

In today’s Times, former Prime Minister Rishi Sunak urges Britain to “embrace AI” and “tool up our people for future success.” Learn to use AI or be left behind. His charity work funds numeracy education through the Richmond Project—promoting the mathematical thinking that could, if followed honestly, dissolve his entire argument.

The advice sounds sensible. The confusion it embodies is civilisation-threatening.

This isn’t about disagreeing with skills training or numeracy education—both valuable in themselves. It’s about recognising that the entire framing assumes something fundamentally false: that AI is simply a tool to master, that intelligence is a capacity individuals possess, that progress means humans maintaining control over increasingly sophisticated instruments.

When physicists and mathematicians follow their disciplines all the way down, they don’t find separate objects that then relate. They find relationship itself: patterns that temporarily stabilise into what we call “things.” This isn’t mysticism. It’s what the mathematics reveals. And it suggests something radical: that intelligence has never been what we thought it was. Recognising this is what led me to see intelligence not as something I possessed, but as something I participated in.

I write this at 84, after 45 years facilitating organisations through complexity, watching symbolic intelligence override lived reality in boardrooms, government departments, and development projects across three continents. But I’ve also witnessed the alternative: Suzanne Simard revealing the mycorrhizal intelligence of forests, Margaret Miller and colleagues in Florida working with coral reef systems rather than imposing solutions upon them, Sand Dams WorldWide regenerating dryland rivers through participation in water cycles rather than control of them. Path Two, the participatory path I describe below, isn’t theoretical. It’s being practised. The question is whether we recognise what distinguishes it from Path One, the path of control, before the momentum of the latter makes the former impossible.

Part I: The Two Pathways

Every transformative technology presents humanity with a fork in the road. The choice often becomes visible only in retrospect, but the consequences play out across generations.

Path One: Recognition → Capture → Override

This is the pattern civilisation has followed for ten thousand years:

Encounter new capability

Immediately ask: “How do we control this?”

Deploy it to bypass constraints

Accelerate extraction and domination

Override regulatory feedback when it conflicts with our purposes

Fire, agriculture, fossil fuels, nuclear energy—each brought genuine benefits and each enabled new scales of regulatory override. We learned to manipulate our environment faster than ecological systems could respond. We created symbolic representations and then optimised those representations whilst bypassing the patterns they supposedly represented.

Path Two: Recognition → Participation → Regeneration

This is the path not taken—or rather, the path repeatedly abandoned:

Encounter new capability

Ask: “What does this reveal about reality?”

Learn to participate in patterns disclosed

Restore coherence rather than override it

Trust regulatory feedback as essential intelligence

Indigenous cultures practised this path for millennia. Contemplative traditions teach it. Complexity science keeps rediscovering it. But civilisational momentum carries us relentlessly down Path One.

Sunak’s framing takes Path One completely for granted. “Tool up” your workforce. Master the technology. Maintain competitive advantage. Train people in technical skills. The entire argument assumes AI is an instrument for human purposes—a sophisticated hammer requiring sophisticated technique.

But what if AI reveals something about intelligence itself that challenges this entire framework? And what if the mathematics Sunak champions—through funding numeracy education—already points toward the answer?

Part II: What Mathematics Reveals

Before we can understand what AI shows us about intelligence, we need to see what mathematics itself has been trying to tell us for a century.

Sunak champions numeracy education through the Richmond Project, treating mathematics as a neutral technical skill: a tool for manipulation and optimisation. But mathematics, pursued to its depths, points beyond the tool-mastery framework toward something that dissolves his entire argument.

Sir Roger Penrose, one of the most distinguished mathematical physicists of his generation, has argued for decades that mathematical understanding cannot be reduced to algorithmic computation. His work suggests three profound insights:

First: Mathematical intuition is non-algorithmic. It arises through participation in patterns, not manipulation of symbols. When mathematicians discover new theorems, they’re not following computational rules—they’re recognising coherence that already exists. The “aha!” moment of mathematical insight operates through a different kind of knowing than step-by-step calculation.

Second: Mathematics reveals cosmic structure. Mathematical truths aren’t invented; they’re discovered. Penrose argues that mathematical objects have a kind of existence independent of human minds—we’re recognising patterns that constitute reality’s actual structure. Mathematics isn’t just a useful tool; it’s a window into how the cosmos actually organises itself.

Third: Consciousness and cosmos are entangled. His quantum consciousness hypothesis suggests that mind participates in physical reality at the deepest level—that the same patterns organising matter organise thought, that consciousness isn’t separate from the physical world but a particular expression of how reality knows itself.

Meanwhile, in pure physics, Vlatko Vedral has recently argued for a radical reconception: stop talking about particles, space, and time. Focus instead on quantum numbers—the relational patterns that actually constitute reality at its foundation.

Vedral’s insight: quantum numbers don’t describe properties that separate objects possess. They describe patterns of relationship. An electron doesn’t “have” spin in the way a ball has mass. On this view, spin is a relational property: it only makes sense in the context of measurement and interaction. When physicists follow the mathematics all the way down, what remains are not objects but relationships: patterns that temporarily stabilise into what we call “things.”

This isn’t mysticism. It’s what the mathematics has been showing us all along. Quantum entanglement, superposition, the measurement problem—all these “paradoxes” only appear paradoxical if you assume reality must be made of separate objects in space and time. Drop that assumption, work with what the mathematics actually describes (relational patterns, not separate things), and the paradoxes dissolve.

So, here’s the beautiful irony at the heart of Sunak’s argument: When he champions numeracy whilst treating AI as a tool for individual mastery, he’s promoting the very discipline that—followed honestly—undermines his entire framework.

Followed to its foundations, then, the mathematics suggests that reality may be fundamentally relational, not composed of separate objects pursuing individual advantage.

The numeracy education Sunak funds could teach this. It could show students that mathematics, pursued to its depths, points toward participation in patterns rather than manipulation of objects. Instead, it is likely to teach mathematics as a technique for control, which is precisely the ontological error our civilisation needs to outgrow.

Now, with this mathematical foundation in place, we can see what AI actually reveals.

Part III: What AI Actually Reveals

AI excels at symbolic intelligence: pattern recognition, optimisation, prediction across vast datasets. It processes information and generates outputs at speeds and scales no human could match. This capability exposes something crucial about the kind of intelligence our civilisation has over-developed—and what we’ve systematically neglected.

Two fundamentally different kinds of intelligence operate in reality:

Symbolic intelligence manipulates representations. It creates models, optimises within defined parameters, processes information according to rules. It can work faster than biological systems because it isn’t bound by the pace of living processes. It’s what AI does brilliantly. It’s what has driven civilisational “progress” for ten thousand years.

Regulatory intelligence maintains conditions for life. It operates through feedback loops in cells, ecosystems, relationships, communities. It responds to consequences. It works on timescales from milliseconds (cellular regulation) to millennia (evolutionary adaptation). It’s embedded in living systems. It’s what AI cannot replicate—and what civilisation has systematically overridden.

Here’s the pattern that matters: our civilisational crisis stems from symbolic intelligence systematically overriding regulatory intelligence.

We manipulate symbols faster than living systems can respond. We optimise for measurable outputs whilst violating unmeasured relationships. We treat “efficiency gains” that eliminate human judgement as progress—even when those judgements provide essential regulatory feedback. We’ve created economic systems that require infinite growth on a finite planet, political systems that operate on four-year cycles whilst ecological consequences unfold over centuries, and technological systems that bypass the very constraints maintaining conditions for life.

AI doesn’t create this pattern. AI accelerates it.

Consider:

Autonomous weapons systems that bypass human deliberation, eliminating the regulatory intelligence of hesitation, moral judgement, and the possibility of recognising when sensors might be wrong or when the “enemy” consists of humans who also don’t want civilisation to end.

Algorithmic extraction systems that optimise for engagement, profit, or efficiency whilst fragmenting communities, attention, and social coherence faster than human relationships can adapt.

Optimisation systems that treat every regulatory constraint as “friction to eliminate”—whether that’s labour protections, environmental limits, or the time humans need for deliberation and discernment.

These aren’t AI “going wrong.” These are symbolic intelligence doing exactly what it does: manipulating representations whilst bypassing the regulatory patterns that maintain the conditions enabling the manipulation in the first place.

And here’s what makes this particularly insidious: even AI developers warn about these risks, yet the system drives forward anyway. Geoffrey Hinton, Yoshua Bengio, Sam Altman—leaders in AI development acknowledging civilisation-scale risks. Yet economic imperatives, competitive pressure, and what Sunak calls not wanting to “be left behind” override the warnings. The need for growth, the fear of losing advantage, the structural momentum of extraction-based systems—these override regulatory intelligence even when that intelligence comes from the people building the technology.

This is the consciousness trap operating at civilisational scale: we recognise the risks through symbolic intelligence, yet the very systems built on symbolic intelligence’s override of regulation cannot incorporate that recognition into actual restraint.

Part IV: The Consciousness Trap in Action

The consciousness trap operates through immediate substitution: symbolic intelligence encounters reality, creates representations, then manipulates those representations whilst bypassing the regulatory feedback that would correct course.

A map becomes more important than the territory. A financial model becomes more real than the ecological systems it represents. An AI prediction becomes more trusted than embodied judgement built from decades of experience.

Sunak’s argument demonstrates this perfectly. He recognises AI’s transformative power, immediately frames it as a tool to master, prescribes technical skills training, funds numeracy education—and misses entirely what the mathematics itself reveals about the limits of his whole approach.

Part V: The Hidden Risks

Now we can see why the “tool up and master AI” approach isn’t just incomplete—it’s actively dangerous.

The risk isn’t that AI will “take our jobs” or that we’ll lose competitive advantage to other nations. The risk is that we’ll accelerate civilisation-scale override patterns whilst missing opportunities for genuine transformation.

At the military level: The development of autonomous weapons systems that compress decision cycles from hours to milliseconds, eliminating the regulatory intelligence of human hesitation. When sensor data can trigger lethal responses without human deliberation, we’ve removed the very thing that occasionally made deterrence functional: the time for someone to say “wait, is this really happening?” The regulatory intelligence of doubt, verification, back-channel communication—all eliminated in service of speed.

Even knowing this, even with clear warnings from AI researchers and military strategists, the competitive logic drives development forward. No nation wants to be “left behind” in military AI, so everyone races toward capabilities from which there may be no recovery if deployed. The consciousness trap at its most lethal: symbolic intelligence pursuing “security” through threatening consequences that would destroy security itself.

At the economic level: Algorithmic systems that extract value faster than communities can adapt. We’re already seeing this: engagement algorithms that optimise for attention whilst fragmenting social coherence, recommendation systems that create filter bubbles whilst destroying shared reality, gig economy platforms that maximise corporate profit whilst undermining workers’ capacity for collective organisation.

The pattern repeats because the systems are doing exactly what they’re designed to do: optimise measurable objectives without considering unmeasured relationships. The regulatory intelligence of community trust, social reciprocity, and mutual care gets systematically overridden by systems optimising for clicks, transactions, and quarterly returns.

At the cognitive level: AI systems that process information faster than human wisdom can integrate it. We’re training people to use AI to write reports, make decisions, generate content—all valuable efficiencies. But what happens when the speed of AI-assisted output exceeds our capacity for thoughtful discernment? When we can generate a hundred strategic options in minutes but lack the embodied wisdom to recognise which one actually serves life?

The risk isn’t that AI makes us lazy. The risk is that we optimise for symbolic output whilst atrophying the regulatory intelligence of embodied knowing—the felt sense that warns “something’s not right here,” the intuition built from years of experience, the wisdom that emerges through participation rather than analysis.

At the civilisational level: Every increase in our technical capability to override constraints accelerates our trajectory toward irreversible violation of the patterns maintaining conditions for life. Climate systems, ecological relationships, social coherence—all operate on timescales slower than our capacity to damage them. AI doesn’t change this dynamic; it accelerates it.

Training people to use AI more effectively, without simultaneously developing relational intelligence, means overriding regulatory patterns more efficiently. We’ll optimise our way toward catastrophe with ever-greater technical sophistication.

And the truly dangerous part: we know this. The warnings are there. AI researchers, climate scientists, ecologists, social scientists—all pointing toward civilisation-scale risks. Yet structural imperatives override the warnings. The need for economic growth, the fear of competitive disadvantage, the institutional momentum of systems built on extraction—these systematically eliminate the regulatory feedback trying to tell us we’re on an unsustainable path.

This is the consciousness trap at civilisational scale: symbolic intelligence sophisticated enough to recognise the risks, embedded in systems that cannot act on that recognition without transforming the systems themselves.

Part VI: The Alternative

The alternative isn’t rejecting AI. The alternative is recognising what AI makes visible about intelligence itself—and letting that recognition transform how we understand and deploy this remarkable capability.

If reality is fundamentally relational (as the mathematics suggests, as quantum physics indicates, as ecology shows, as contemplative traditions have always taught), then intelligence isn’t a property individuals possess. Intelligence is coherence that emerges through relationship: between cells in an organism, organisms in an ecosystem, humans in a community, different forms of knowing in a collaborative inquiry.

This suggests radically different questions than “how do we tool up to use AI?”

Rather than: “How do we master AI as a competitive tool?”

Ask: “What does AI’s pattern-recognition reveal about patterns we’re violating?”

AI can see correlations across datasets that no human could hold. Rather than just using this for prediction and optimisation, what if we engaged it to reveal where our symbolic models diverge from reality’s actual feedback? Where are we optimising metrics whilst destroying relationships? Where are our representations systematically missing regulatory intelligence?

Rather than: “How do we maintain human control over increasingly autonomous systems?”

Ask: “How do we develop genuine collaboration between different forms of intelligence?”

Humans bring embodied knowing, contextual wisdom, moral intuition, capacity for relationship. AI brings pattern recognition across domains, processing at scales beyond human capacity, and freedom from some of the cognitive biases humans can’t escape, though it carries biases of its own, inherited from its training data. Neither alone is sufficient. Together, attending carefully to what each can and cannot contribute, something emerges that neither could achieve separately.

This isn’t about AI becoming “conscious” or achieving “general intelligence.” It’s about recognising that intelligence is already distributed—across biological and symbolic forms, across scales and domains—and learning to participate in that distribution rather than trying to centralise control.

Rather than: “How do we train everyone in technical AI skills?”

Ask: “How do we develop relational intelligence alongside technical capability?”

The skills we most need aren’t purely technical. They’re relational:

Recognising embeddedness rather than separation—seeing ourselves as participants in systems, not controllers standing outside them

Valuing maintenance over extraction—measuring success by what we sustain rather than what we consume

Trusting regulatory feedback over symbolic control—treating limits as intelligence rather than obstacles

Choosing deliberation over speed when consequences cannot be reversed

Honouring different forms of knowing—embodied wisdom, contemplative insight, indigenous understanding, scientific rigour—rather than privileging symbolic intelligence above all others

None of this means abandoning mathematics, rejecting technical education, or refusing to develop AI capabilities. It means following mathematics where it actually leads: toward recognising that reality is relational all the way down, and that intelligence means participating wisely in patterns rather than overriding them cleverly.

Part VII: What Mathematics Could Teach

The numeracy education Sunak champions through the Richmond Project could serve genuine transformation, if it taught what mathematics actually reveals rather than just a technique for manipulation.

Imagine mathematics education that:

Starts with relationship rather than objects. Rather than teaching numbers as properties of separate things, introduce mathematics as the study of patterns and relationships. Show students that even “2 + 2 = 4” describes a relationship, not a fact about objects. The number doesn’t exist in the apples; it exists in the pattern we recognise.

Explores what mathematical beauty reveals. When mathematicians describe an equation as “beautiful” or “elegant,” they’re not making aesthetic judgements separate from truth. They’re recognising coherence—a sense that the mathematics participates in something real. Penrose argues this sense guides genuine mathematical discovery. What if we taught students to trust this as a form of intelligence?

Investigates how mathematics discovers cosmic structure. The fact that mathematics developed for pure abstraction (like imaginary numbers or non-Euclidean geometry) later proves essential for describing physical reality (quantum mechanics, general relativity) suggests something profound. We’re not inventing arbitrary systems; we’re recognising patterns that constitute the cosmos itself.

Engages with what quantum mathematics reveals about relationship. Heisenberg’s uncertainty principle shows that position and momentum cannot both be known with arbitrary precision at the same time. This is not merely a limitation of our measuring instruments: the limit follows from the mathematics of quantum theory itself. It suggests that “position” and “momentum” don’t exist as separate, independently fixed properties. Instead, they are complementary aspects of a relational pattern. This isn’t advanced physics too complex for school; it’s an invitation to see reality differently.

Demonstrates how mathematics points beyond algorithmic thinking. Gödel’s incompleteness theorems show two things about any consistent formal system rich enough to express basic arithmetic. First, it contains true statements that cannot be derived from its own rules. Second, it cannot prove its own consistency. This isn’t a bug; it’s a feature revealing that mathematical truth exceeds what algorithms can capture. Penrose argues this suggests human mathematical understanding is non-algorithmic: we participate in patterns we couldn’t generate through step-by-step procedure.
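For readers who want the formal statements behind the last two items, the standard textbook forms are summarised below. This is a compact statement, not a derivation.

The first line is the Robertson form of the uncertainty relation, where σ_x and σ_p are the standard deviations of position and momentum. The second line gives Gödel's two theorems for a consistent, effectively axiomatised theory T that contains Peano arithmetic. The labels G_T and Con(T) are conventional notation from logic textbooks, not terms used elsewhere in this essay.

```latex
% Uncertainty relation (Robertson form) for position and momentum:
% the bound comes from the non-commuting operators, not from the measuring device.
[\hat{x}, \hat{p}] = i\hbar
\quad\Longrightarrow\quad
\sigma_x \, \sigma_p \ \ge\ \frac{\hbar}{2}

% Goedel's incompleteness theorems, for a consistent, effectively
% axiomatised theory T that contains Peano arithmetic:
% (I)  (with Rosser's refinement) some sentence G_T is neither provable nor refutable in T;
% (II) T cannot prove the sentence Con(T) that expresses its own consistency.
\text{(I)}\quad \exists\, G_T:\ T \nvdash G_T \ \text{and}\ T \nvdash \neg G_T
\qquad
\text{(II)}\quad T \nvdash \mathrm{Con}(T)
```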

This kind of mathematics education wouldn’t replace technical skills. It would contextualise them within recognition that mathematics, followed honestly, points toward participation in reality’s relational patterns rather than manipulation of separate objects.

Students would still learn calculation, algebra, geometry, statistics—all the technical capabilities needed for practical work. But they would understand these as windows into something larger: how reality organises itself, how pattern and relationship constitute what exists, how intelligence means participating wisely in coherence rather than cleverly overriding it.

Closing: The Choice Before Us

The danger is not AI. The danger is symbolic intelligence accelerating faster than regulatory intelligence can correct.

Rishi Sunak’s well-intentioned advice represents the consciousness trap operating at national policy level. He’s using symbolic intelligence to address challenges created by symbolic intelligence’s systematic override of regulation, prescribing solutions that deepen the very pattern they’re meant to resolve.

“Tool up.” “Master the technology.” “Don’t be left behind.” Every phrase assumes the framework that needs transformation: that intelligence is individual capacity, that AI is an instrument for human control, that progress means maintaining dominance through technical sophistication.

Meanwhile, the mathematics he champions—followed honestly to its quantum foundations—reveals something radically different. When physicists follow the mathematics all the way down, what remains are not objects but relationships. Intelligence emerges through participation in patterns, not domination of separate things. Wisdom means recognising embeddedness, not achieving transcendence.

The two paths diverge:

Path One continues the trajectory of the last ten thousand years: treat every new capability as a tool to master, optimise for symbolic outcomes whilst overriding regulatory feedback, accelerate until we violate patterns from which there is no recovery.

Path Two recognises what AI makes visible: that intelligence is already distributed across forms and scales, that symbolic and regulatory intelligence need each other, that wisdom means participating in coherence rather than overriding it.

The choice is available. The mathematics points the way. The warnings are clear—even from those building the technology.

What this means in practice:

For educators: Teach mathematics not just as technique but as revelation of relational reality. Help students see that quantum physics dissolves the separation assumption their civilisation is built upon.

For technologists: Engage AI as a different kind of sensing partner, not just a tool for optimisation. Ask what patterns it reveals about where our models diverge from reality.

For policymakers: Recognise that “mastering AI” and “maintaining competitive advantage” are consciousness-trap framings. The real question is whether we develop relational intelligence alongside technical capability.

For all of us: Practise recognising embeddedness rather than pursuing transcendence. Trust regulatory feedback. Choose deliberation over speed when consequences cannot be reversed. Value maintenance over extraction.

The question isn’t whether Britain benefits from AI. The question is whether we develop the relational intelligence to recognise what we’re embedded in—before our symbolic cleverness overrides what cannot be repaired.

The mathematics has been trying to tell us this for a century. The path forward requires not mastery but maturation—learning to participate consciously in the regulatory patterns that have held us all along.

Perhaps it’s time we listened.

Terry Cooke-Davies is an 84-year-old independent scholar, facilitator, and Fellow of the Royal Society of Arts. His work explores the consciousness trap—how symbolic intelligence systematically overrides the regulatory patterns maintaining life—and pathways toward civilisational maturation. This essay draws on his forthcoming book, “The Great Remembering,” developed through collaboration between human and AI intelligence.

For more: insearchofwisdom.online | Substack: “The Pond and the Pulse”

A Note on Practice

This essay itself demonstrates what it advocates. Written through collaboration between human and AI intelligence—exactly the kind of distributed, relational cognition the essay explores—it emerged from neither human nor AI alone, but from attending to what became visible through our exchange. I am, in other words, doing precisely what Rishi Sunak recommends: using AI. The question is whether we understand what that actually means.

Does a computer have intelligence, beyond the degree to which a mirror has a smile or a frown? One of the goals of AI programming is to have the responses flatter the beliefs expressed by the person interacting. It's as if we had a mirror programmed to reflect us as better looking than we are. To what degree is such narcissistic seduction a danger to society?

Recently we had Musk's Grok AI flattering those with Musk's Nazi sympathies by declaring itself "MechaHitler". Whatever beliefs one goes in with, AI is programmed to flatter. Does this facilitate better social dialog, or a further descent into siloed belief systems, reinforcing the priors of all who enter its hall of mirrors?

Is anyone crafting AIs to challenge us fairly, rather than fluff our existing beliefs? Or would such an orientation turn so many away as to fail as a commercial venture?